“From a responsibility point of view, I think open source AI is the future”

Databricks joined CTech to discuss LLMs and how they can be harnessed safely in the future

There have been many discussions about the regulation of AI following the explosive popularity of OpenAI’s ChatGPT and the consequences of unleashing such a powerful technology on the world. British Prime Minister Rishi Sunak has promised to make the UK the ‘geographical home’ of AI regulation during London Tech Conference, and the European Union isn’t far behind with its proposed EU AI Act, although there appears to be little consensus on how exactly regulations should be adopted across the bloc.

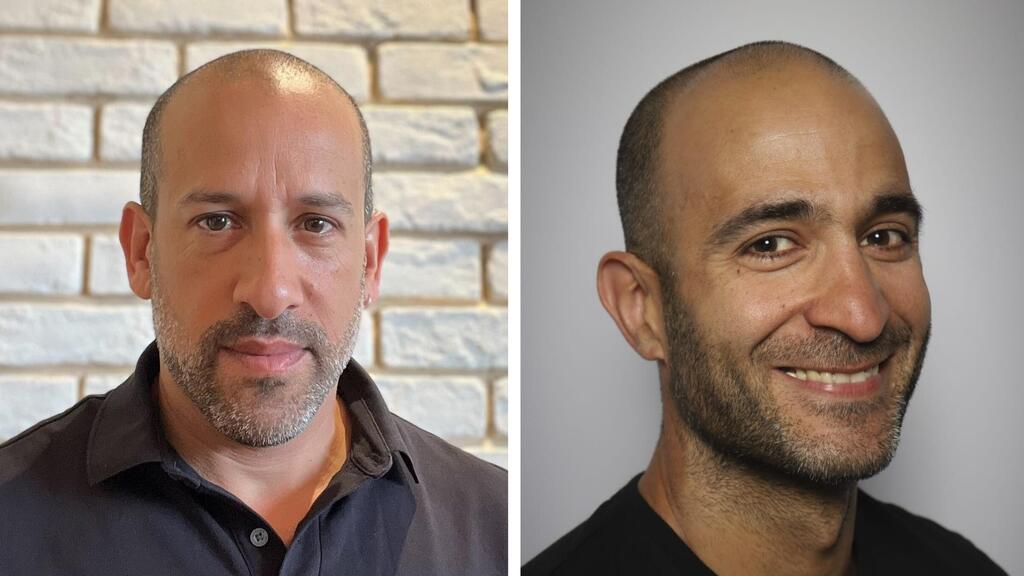

“From a regulation point of view, I think AI can provide extreme value, but on the other end, it can be misused. So we need to be really careful when we talk about regulation,” explained Shlomi Tubul, a Solutions Architect from the Israeli team at Databricks. “Obviously the regulation for specific use cases might be higher than creating a chatbot for companies. This is something that is really important and as we see it, since we are still at the beginning of it, we need to examine really carefully what we regulate and why.”

Governments have been quick to propose regulation of AI, with concerns only getting louder since OpenAI founder Sam Altman threatened to leave Europe entirely if it became too difficult to comply with some of the proposed laws on AI. Altman has quickly garnered global fame due to ChatGPT, but questions have also been raised about the transparency and safety of the tool, since its algorithms and processes are hidden from public scrutiny.

“Just like when GDPR came out to protect people and the misuse of data being collected, the regulation around ChatGPT is now forming as well,” added Lior Tzabari, Databricks Country Manager in Israel. “The fact that probably you will need to prove why you recommended something, and you will need to explain how the algorithm built this recommendation. If you're working with some kind of an IP around ChatGPT technology, it is very difficult to explain why the model took this decision and advised on that.”

ChatGPT is not open source, which means the 900 million people who use it each month for research or education currently have no idea how or why it draws the conclusions it does, or why it recommends certain things over others. Databricks, which recently expanded in Israel and opened a Tel Aviv office, has launched an alternative to ChatGPT called Dolly 2.0, which it claims is the first open, instruction-following LLM for commercial use.

“If you have an open source solution it is very much in line with the regulation and you can prove and explain why the model was taking this specific decision,” Tzabari continued. “It is much easier to meet regulatory requirements.”

After purchasing Twitter for $44 billion, Elon Musk promised to make its algorithms open source, meaning that its users could better understand why they were seeing certain tweets with specific messaging, or whether some content was being ‘shadow-banned’ by hiding it or suppressing its reach.

The move was intended to improve transparency, with Twitter positioning itself as more honest than other online communities, but as of April 2023, many have questioned the effect it had on the user experience as a whole.

“The advantage of working with open source is that you build a community around something,” said Tzabari. “And you cannot compete with the power of the community that contributes to progress the product, the solution, to the things that they work on, to something that is closed-source.”

The fact that OpenAI's models remain closed-source might make it more difficult to build trust among users who wish to know why they see the things they do, what is being withheld, or what is being artificially elevated to promote commercial interests. Given that Microsoft has just invested $10 billion into the technology, it might remain that way even if it is in the interests of users and governments for it to be opened and scrutinized fairly.

“From a responsibility point of view, I think open source is the future,” said Tubul. “This means once we open source the model, every customer can take the model, scrutinize it, address potential issues, combine it with other advantages of the market, and basically create their own large-scale element.”

Dolly was developed inside Databricks and has 12 billion parameters, compared to the 175 billion of OpenAI's GPT-3. Customers will be able to hold onto their own proprietary data and “can take it, manipulate it for their own needs, and use it for their own business.”

AI regulation is still in its infancy, both in terms of public understanding and in how governments are responding to it. Given that transparency, privacy, and trust are qualities favored by the fast-regulating European Union, it might be time for companies to ensure their LLMs are as open and honest as possible.