January 28th is officially Data Privacy Day in Israel, Europe, North America, and Nigeria. The day commemorates the signing of Convention 108 in 1981, arguably the first binding international treaty that recognizes rights in the area of data privacy and data protection. In light of the increasing focus on these areas, some have even expanded the day to last the entire final week of January.

Notably, the US Congressional resolution that created the country’s first Privacy Day in 2008 didn’t bother to mention the Europeans, who had been the first to promote it through the Council of Europe years earlier.

Regardless of whose idea this was, or whether it’s a week or simply a day, the overarching goal of this period is to raise awareness and to promote education regarding the importance and value of data privacy.

Coincidentally, Kimi is a film due for release early next month on HBO Max. The timely and apropos film is produced and written by David Koepp (Jurassic Park, Spider-Man and Mission: Impossible) and directed by Academy Award winner Steven Soderbergh. The movie stars Zoë Kravitz as an agoraphobic transcriber for an Amazon Alexa-like device, the eponymous Kimi, that seems to record everything.

As far as can be determined from the movie trailer, Zoë’s character, Angela Childs, stumbles across a recording of what seems to be a premeditated murder, caught by one of the Kimi devices. Set during the current pandemic, the film has Angela navigating through Covid-19 restriction protestors in what we can only assume is an effort to help solve the murder.

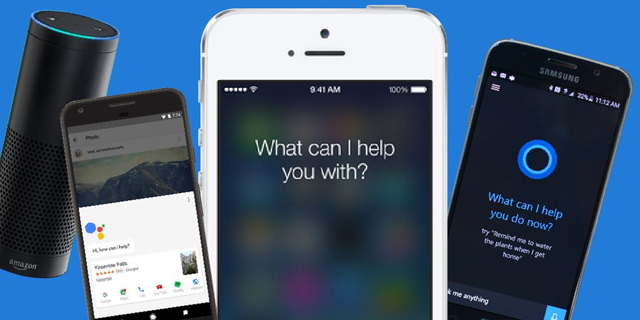

We are all familiar with real-world Kimi-like devices such as Amazon’s Alexa and Apple’s Siri. These represent two popular but distinct applications of artificial intelligence (AI) personal assistants. Amazon’s is principally an AI that is typically embedded within a stationary object such as the Echo smart speaker, although Alexa is also available as a phone application. In contrast, Apple’s Siri is closely associated with the iPhone and is intended to be available wherever the user is: less of a music device and more of a generally unhelpful helpmate.

In the case of Alexa, the device employs AI tools such as natural language processing (NLP) both to understand the questions and requests posed by its millions of human customers and to give the underlying AI the ability to read and process online resources in order to answer those queries.

These devices are designed to do more than simply play music or provide weather reports. They often interface with other smart devices within the home, acting as a central hub to control various appliances, lights, security cameras and even a twerking bear. Yet, even with all of its applicability, it seems that consumers have so far failed to take advantage of all of the billions of dollars of research and development that Amazon pours into its devices through thousands of its workers.

Part of this lackluster level of interaction with these devices has been attributed to user concerns with regard to privacy. Initially it seemed that Amazon would strongly defend its users’ privacy when it refused to hand over Alexa recordings associated with a now defunct murder investigation, going so far as to argue that its AI device had free speech rights that provided cover for not cooperating with the police.

However, as the devices became more popular, it became apparent that not only was Amazon extensively recording conversations between customers and their devices, even in very private health care settings, but it also had human employees reviewing those interactions. And in at least one particularly egregious case, Amazon mistakenly sent Alexa recordings to a third party.

Amazon’s devices also create a host of privacy concerns through their connections with third-party applications and skills, or through interacting with often unencrypted home appliances over sometimes unsecured Wi-Fi. The latter can result in malware infiltration, ID theft or phishing attacks, especially in jurisdictions that lack internet of things (IoT) protection laws like the recent ones now available in California and Oregon.

In one case, it was shown that a hacker could easily interact with Alexa through a window to wreak havoc.

Unfortunately for consumers, AIs like Alexa are not just recording your sometimes insipid questions. They are also extracting actionable data from just the way you speak with Alexa, i.e., voiceprints and voice inferred information (VII), even reportedly from millions of children.

VII is extractable from simply your voice, regardless of the content of the speech itself. This is similar to the metadata collected from your phone calls. However, VII can be especially informative as it can include your physical and mental health, your emotional state, your personality, even whether you are likely to default on a loan. Voiceprints have additional value beyond assessing the inner machinations of the mind: they are already extensively used as ID biomarkers by institutions like banks and brokerages. These records need to be particularly protected from malicious individuals.

With voice collected by smart speakers and the like able to provide AI algorithms with information regarding physical health, gender, mental health, age, personality, socioeconomic status, geographical origin, mood, body type and even intention to deceive, one has to wonder how this information is currently being protected from misuse and mishandling. Notably, it is not only these smart speakers that are recording our speech, but likely numerous other smart machines that are quickly filling up our homes.

The security and privacy of voice inferred information is currently underregulated. How so? While the underlying recorded voice itself may be subject to various biometric laws and their associated protections granted to consumers, it remains unclear as to whether the data that isn’t collected per se, but rather extracted from the voice by bespoke and proprietary algorithms, is also subject to the same sorts of rules and regulations.

Consider one of the most aggressive biometric laws in the United States, Illinois’ Biometric Information Privacy Act (BIPA). While that law has been used to support hundreds of class action lawsuits, even it remains limited in the level of protection it provides to consumers who have been subject to voice inferred information extraction. BIPA provides consumer protection only for voice biometric identifiers, not for any of the extractable personal information described earlier. Similarly, many of the innovative components of the expansive GDPR, the European data protection regime which has collected more than a billion dollars in fines just in the past year, are limited to privacy protections of data collected, not necessarily data created by the collectors.

Further, the new California Privacy Rights Act (CPRA), which goes into effect next January but has look-back provisions that already apply beginning in 2022, is similarly focused principally on data collected (such as the right to know about the data collected and the right to delete data collected) but not on information that was created from the collected data.

And with Amazon supposedly on the verge of doing with ultrasound and infrared data what it already does with voice, these aforementioned concerns are only going to multiply.

But even where a company may not have legal obligations vis-à-vis privacy under current laws or the incoming privacy regulations such as the CPRA, VCDPA, or CPA, which enforce things like transparency and informed consent to protect collected private information, these laws still create additional legal obligations to protect held personal data from outside hackers. Time will tell if this is enough protection.

In Europe, January 28th is known as Data Protection Day, not Data Privacy Day. That seemingly simple semantic distinction could make all the difference in the area of voice inferred information.

Prof. Dov Greenbaum is the director of the Zvi Meitar Institute for Legal Implications of Emerging Technologies at the Harry Radzyner Law School, at IDC Herzliya