Apple stakes its claim in the AI race, betting on privacy and personalization

Apple’s WWDC showcased a GenAI strategy prioritizing privacy and personalized intelligence. Leveraging its ecosystem and powerful processors, Apple aims to deliver unique AI solutions that stand out from competitors.

Expectations ran high for the opening event of Apple's annual developer conference (WWDC), held on Monday at the company's headquarters in Cupertino. It is no exaggeration to call it a make-or-break moment for Apple, at least in the field of generative artificial intelligence (GenAI). The event would determine whether Apple joins the leading pack or remains at the back, watching Google, Meta, Microsoft, and OpenAI leave it in the dust.

When it was all over, after the executives had finished presenting the company's GenAI strategy and Tim Cook had said goodbye to the thousands who filled the plaza at the entrance to the company's headquarters (the traditional venue for these announcements, the Steve Jobs Theater, was abandoned due to the large number of guests), it could be said with a certain degree of relief: Apple did it. The company may not have jumped ahead of everyone or placed itself at the head of the pack, but it has done enough to join the leading players. Apple introduced enough capabilities and exclusive features to offer differentiated products, putting it in a good position to become a major player, possibly even more than that. This is true, at least, at the level of promises and potential. The next essential question, that of execution, will only be answered in the coming weeks and months, when the capabilities Apple introduced reach users.

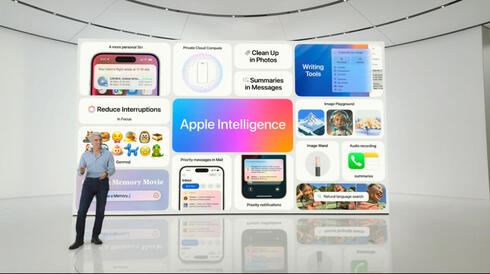

The launch event was divided almost equally into two parts. The first part presented a host of updates that will be integrated into the new versions of the operating systems for various Apple devices. As interesting as they were, the audience was really waiting for the second part: the unveiling of Apple's GenAI system. And it did come, with a lot of glittering promises.

In the last two years, we have already seen many systems and services based on GenAI models, such as DALL-E, ChatGPT, Gemini, Grok, Llama, Copilot, and more. Apple's system, Apple Intelligence, arrives distinctly late and therefore needs to offer something substantial to set it apart from the others. The late entry did not allow Apple to introduce major innovations in the capabilities of its model, so it leaned on a different advantage: the large and varied base of user information created and stored on the devices it produces, and the possibility of using this data in unique ways while maintaining user privacy.

"When we think about what is useful artificial intelligence, we need to focus on the user," said Apple's senior vice president of software engineering, Craig Federighi, in a meeting with reporters. "The goal is not to replace users, but to empower them. For this to happen, the intelligence needs to be aware of all your personal contexts and protect privacy. In a query like 'When do I have to leave work to make it to my daughter's play,' a traditional chatbot cannot know who your daughter is, what play it is, where you need to go, and your preferred mode of transportation. But the device in your pocket knows this. The ability to combine all these things is personal intelligence."

The system will allow Apple devices to create text and images based on an understanding of the user's personal context, drawing on the information stored on the various devices. Siri will be at the center of these capabilities. It will be possible to talk to Siri in natural language and, for example, correct yourself in the middle of a command (e.g., "sorry, I meant..."). Siri will also remember previous commands (e.g., if the user asked for information about a certain location, the next command could be "schedule a meeting there tomorrow at 11:00"). Siri will also be able to respond to loosely phrased requests, such as "how can I write a message now and send it later?"

Siri will be aware of the user's personal context (emails received, links sent by friends, notes written, and more) and can use this information to perform actions. For example, it can find a recommendation a friend sent you without your having to specify when it was sent or in which application. If you are asked to enter a driver's license number, Siri will be able to find a photo of the license, locate the number, and automatically enter it into the form. In a query like "Siri, when is my mother landing?", Siri will know how to find the flight details your mother sent without being told where and when they were sent, and display the result based on current landing information. Siri's new capabilities will be available on iPhone, iPad, and Mac.

In any application where text is written (email, word processor, etc.), you can ask the new system to rewrite a message in a desired tone (e.g., make correspondence more professional, or even turn it into a song) or to proofread it. If you don't know how to respond to an email, the system will suggest possible responses that also take past conversations into account. It will also be possible to get summaries of email threads that highlight the relevant information and any action items. Push notifications will be prioritized automatically, with options such as reducing interruptions and intelligently displaying only important notifications.

The image creation capability of Apple Intelligence includes options such as creating new emojis from a text command. For example, you can ask for an emoji of a smiley with cucumber slices over its eyes, or create an emoji based on a friend's photo. A tool called Image Wand can turn a rough sketch made in the Notes application into a full illustration with a particularly polished background. Don't know what to draw? You can mark out an empty space for the illustration, then use the wand to get a suggestion for a suitable illustration based on the text next to it.

When searching for images and videos, you can use natural language to find specific things, such as a particular person riding a bicycle in a red shirt. Want to create a family clip that sums up an experience like a trip abroad? You can do this with a text command, and the result can be a clip with a narrative structure and appropriate music from Apple Music.

The true capabilities of the system will become clear only when it reaches widespread use, but it is already evident that there are areas where it falls short of other systems. For example, the image creation feature only produces images in a sketch, illustration, or animation style, and will not produce realistic images like those other models can generate. Is this because Apple has not been able to build a system that compares to the others, or because it believes that even the capabilities currently on the market are not of sufficient quality to earn the Apple mark of approval? At this point, it is unclear.

It is likely that in other areas as well, the capabilities of Apple's models are limited compared to those of competitors. Apple therefore tries to compensate by integrating models from other companies, primarily OpenAI, into its system. For example, Siri will be able to connect to ChatGPT if it determines that a query needs assistance from this model. The user only needs to confirm the sharing of information with OpenAI, and the result is presented organically, as if it had come from Apple's own models. Use will be free, with no need to open a ChatGPT account, but those who wish can connect an existing account and access the paid capabilities they have purchased. Support for additional models from other companies will come in the future.

To offset the weak points of its models, Apple leaned into the places where it is strong. The first is its access to broad personal information about users. Apple users take photos, write, record, and manage calendars, lists, correspondence, contacts, and more on its devices. Often, they do this using the applications built into the device: email, photos, calendar, iMessage (mainly in the US), lists, reminders, and more. Many of them use several of the company's devices, and some use Apple devices exclusively.

This ecosystem gives Apple access to vast amounts of raw data that can now, with modern models, be used to improve GenAI capabilities in ways that were not possible before. Many other companies do not have similar access to this information, or the ability to use it without making users uneasy and provoking an outcry from regulators over privacy violations. The OpenAI model may be more sophisticated, but it does not have access to the photos the user has taken, their list of contacts, or their correspondence with those contacts, nor the ability to tie them all together successfully.

This approach allows Apple to offer much more personal capabilities that competitors are unable to provide. ChatGPT, for example, cannot handle a loosely specified request like "find a photo of my mom from our vacation in Rome two years ago," because it doesn't know who my mom is, where there is a photo of her, or that I was even in Rome. Nor are users likely to rush to give it access to such information. In Apple's case, they do not need to grant access: all the information is already there on their devices, where Apple Intelligence also resides.

Apple can provide such access and capabilities only thanks to its strict protection of user privacy. Many of these actions are performed on the device itself, without sending information to the cloud. For cases that require a larger model that cannot run on the device, Apple created a capability called Private Cloud Compute, which runs cloud-based models on dedicated servers that Apple built around its own processors. When information does have to be sent to the cloud, only the information relevant to the task is sent for processing on Apple's servers. The information is not stored on the servers after the operation is completed and is not accessible to Apple. External experts will be able to verify that the servers meet these requirements.

The company is a pioneer in the field of local processing and has offered such capabilities in other areas in the past. Now, when the need for personal information is greater than ever, and so is the need to protect it, these capabilities become the spearhead of its AI offensive and an advantage the competition cannot match. Google may enjoy similar access to information, but it cannot offer privacy protections as advanced as Apple's, nor does it command the necessary public trust, so it will have more difficulty offering GenAI systems that make such extensive use of personal information. The credit for the strong local processing capabilities, by the way, should go to Apple's most senior Israeli executive, Johny Srouji, who leads the development of its processors. These powerful processors, which are developed in part at the company's R&D center in Israel, are now its not-so-secret weapon in the AI war.

"Privacy from Apple's point of view is not an inhibiting factor, absolutely not," said John Giannandrea, Apple's senior vice president of Machine Learning and AI Strategy. "This is what allows us to process information on the device and offer all kinds of personal experiences."

The combination of access to relevant personal information and maximum privacy allows Apple to offer a GenAI system that really knows the user and understands the context in which they operate, not only in general but on a continuously updated basis. This familiarity largely masks any weakness in the model itself and equips the system with useful everyday applications that other companies are not yet able to provide, certainly not at this level. Competitors' models may be stronger and more powerful, capable of more things and of doing them better and faster, but at the level of actual use, Apple's system will be more useful.

It can be described as the difference between hotel service and a house cleaner who comes once a week. The hotel offers more services, cleans more often, and offers room service with the best dishes. The house cleaner comes once a week for a few hours, but knows exactly what you want cleaned and how, and which dishes you like best. No need to ask; they already know you. This is what Apple currently offers, plus the possibility that one day, not so far away, this cleaner will also be able to offer services on the scale and frequency of a hotel, while still knowing you personally.

This is not the end of the race. It is still impossible to declare that Apple has completed the move successfully, since it still needs to launch the system and fulfill all its promises. But at least when it comes to the vision, and to finding a fundamental way into the field despite its limitations, Apple did it, showing that it is part of the race and on the way to becoming an important player.

The author is a guest of Apple at the developer conference.