After OpenAI’s wild year, three big questions linger about its future

As leadership changes and financial pressures mount, OpenAI must address key challenges to secure its future in AI dominance.

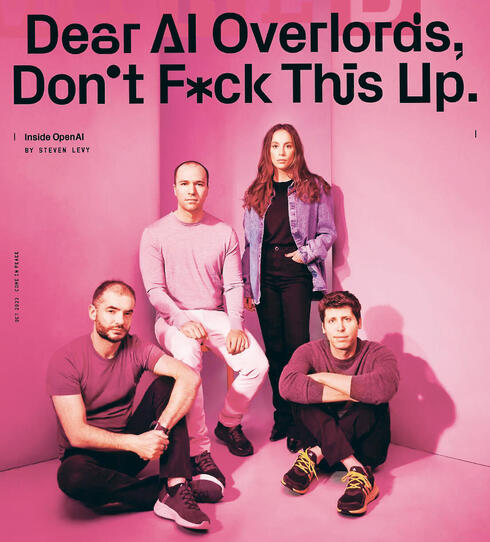

Exactly one year ago, OpenAI's winning team starred on the cover of the October edition of the technology magazine "Wired": CEO Sam Altman, Chief Scientist Ilya Sutskever, Chief Technology Officer Mira Murati and President Greg Brockman. "Dear AI Overlords, Don’t F*ck This Up," said the headline, not imagining that 12 months later Sutskever would resign and start a competing company, Altman would be fired and rehired, Murati would replace Altman as CEO for nine days only to resign months later, and Brockman would resign, return and then go on sabbatical. Although they were not in the picture, the co-founders John Schulman and Andrej Karpathy have also since resigned. This was just the beginning of one of the most turbulent years the technology company has known, with enough drama to fill a lifetime.

One might expect that the exodus of founders, a restructuring of the business, numerous lawsuits, delayed product launches (such as Sora and GPT-5), and mounting financial losses would set off alarm bells for investors. Yet in 2024, as in the years before, nothing seems to stop the march of generative artificial intelligence. Last month, OpenAI raised $6.6 billion in funding, along with a $4 billion credit line, valuing the company at $157 billion. The investment came from major players like SoftBank, Tiger Global, Microsoft, and Nvidia. While the influx of cash provides some breathing room for a company facing skyrocketing operating costs, it doesn’t guarantee future stability. Three key questions loom over OpenAI’s latest round of funding.

1. Who will lead the AI race?

In this massive fundraising effort, Altman set several conditions, including prohibiting investors from funding competing companies, at least as defined by Altman. Reportedly, this includes companies like Elon Musk's xAI, Anthropic, Sutskever's SSI, Perplexity, and Glean. For aggressive investors like SoftBank, known for funding both companies and their competitors, this is a significant limitation. Although these competitors are unlikely to struggle to raise funds, OpenAI’s stance signals its current mindset—oscillating between aggression and paranoia, and for good reason. Competition is fierce, and the margins are slim.

This isn’t the first time a tech company has used its dominance to shape market competition. In generative AI, where success relies on expensive data centers, funding is crucial for staying ahead. OpenAI knows this and is aware that the leader in this rapidly evolving field hasn’t yet been decided. While the company is undeniably central to the landscape, the market hasn’t consolidated, and products from companies like Meta and Google, though launched later, have gained significant traction. It’s now difficult to clearly differentiate between OpenAI’s GPT, Anthropic’s Claude, or Google’s Gemini. Even though some users have strong preferences for one model over the others, these distinctions often come down to personal taste rather than substantial differences.

ChatGPT may be a successful and iconic product with 250 million weekly users and expected revenues of $2.7 billion this year, but it doesn’t dominate the AI service market in the way Google dominates search, Meta rules social media, or Apple controls app stores. Competitors like Meta, which missed the early AI trend by almost a year, are also thriving. In late August, Meta CEO Mark Zuckerberg announced that Llama 3 had 185 million weekly users, an impressive feat for a latecomer.

2. How will OpenAI control rising expenses?

In September, Altman published an ambitious manifesto outlining his vision for society and humanity, highlighting “incredible victories” OpenAI products would achieve. These include “solving climate change, establishing a space colony, and discovering all of physics.” Yes, “all of physics.” Altman, not a physicist himself, sees this as essential for tackling OpenAI’s biggest challenge: runaway expenses. The equation is simple—if OpenAI wants to stay ahead and maximize revenue, it must outpace competitors with superior products at more competitive prices. This requires training ever-larger models, in line with the philosophy that “bigger is better.” Unfortunately, larger models demand exponentially more computing power, driving up costs. OpenAI projects $11.6 billion in revenue next year but anticipates a $5 billion loss.

Generative AI is still in its infancy, and it’s unclear when—or if—OpenAI will launch a game-changing product. Altman envisions that product as a personal assistant capable of managing every email, text, and document in our lives. However, until that happens, OpenAI must face the current limitations of ChatGPT and the image generator DALL-E, both of which are popular but rooted in innovations from 2022. OpenAI’s financial situation is further complicated by its lack of a high-margin business, like Meta and Google’s advertising empires, to fund endless development. Altman has even turned to nuclear fusion, investing over $375 million in Helion Energy over the past three years. Helion aims to build a nuclear fusion reactor by 2028 and recently signed an agreement to supply energy to Microsoft, OpenAI’s largest investor—though many in the scientific community consider this timeline highly ambitious, if not impossible.

3. How will OpenAI address privacy concerns?

While OpenAI strives for breakthroughs in physics, the company urgently needs cash. In its latest funding round, OpenAI restructured as a for-profit company—a stark reversal from its founders’ original mission to ensure AI’s responsible development for the benefit of humanity. Now, profit comes first, with few constraints beyond what’s legally required. This shift has drawn criticism from Altman’s colleagues and played a role in last year’s attempted ousting, which led to a wave of departures from the company. History shows that when a company’s values deteriorate, it’s often the users who suffer.

OpenAI’s decline in values was already apparent when the company opposed California’s legislation to establish basic safety standards for AI models. This stands in stark contrast to its earlier claims of supporting regulation. Simultaneously, OpenAI has entered into partnerships with major publishers like “Time”, “Financial Times”, Axel Springer, “Le Monde”, and Condé Nast (which owns “Vogue”, “Vanity Fair”, and “The New Yorker”), giving it vast access to data. It also invested in a startup developing health-related software, and Altman expanded his separate cryptocurrency project, Worldcoin, which controversially involves scanning people’s eyeballs.

For a company that once declared its models would “deeply understand all issues, industries, cultures, and languages,” requiring “the broadest training sets possible,” its shift toward a profit-first model raises concerns about privacy and safety. OpenAI now has enormous incentives to prioritize data consolidation and surveillance, while sidelining questions of safety and user privacy.